Wget and Curl for Downloading Files in Linux – A Complete Guide

Wget and Curl are powerful command-line tools for downloading files from the web. At a basic level, both tools do the same job. However, Wget is a simpler tool focused on downloading, whereas Curl is a more flexible, multipurpose tool that can both download and upload files.

Wget has an edge when you need to download or mirror entire websites, because it can follow links recursively. Curl, on the other hand, supports a much wider range of protocols and features. Both tools can resume an interrupted download instead of restarting it from the beginning.


Before you start, update the package index and upgrade the installed packages. On Debian- or Ubuntu-based systems, run the following commands in your terminal window:

sudo apt update
sudo apt upgrade

Utilizing the Wget Command in Linux

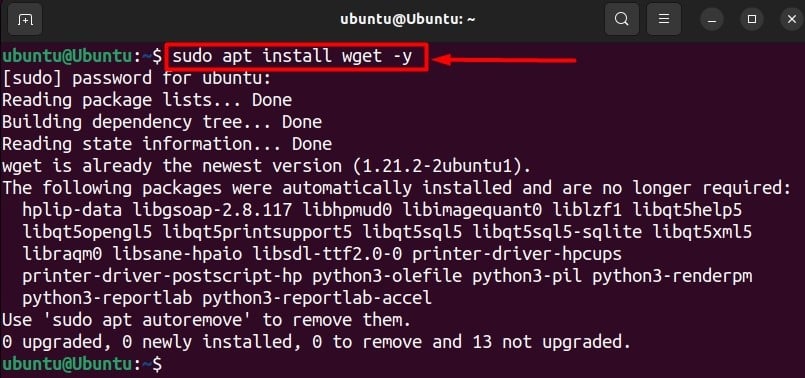

Wget comes preinstalled on many Linux distributions. If it is missing on a Debian- or Ubuntu-based machine, install it with the apt package manager:

sudo apt install wget -y

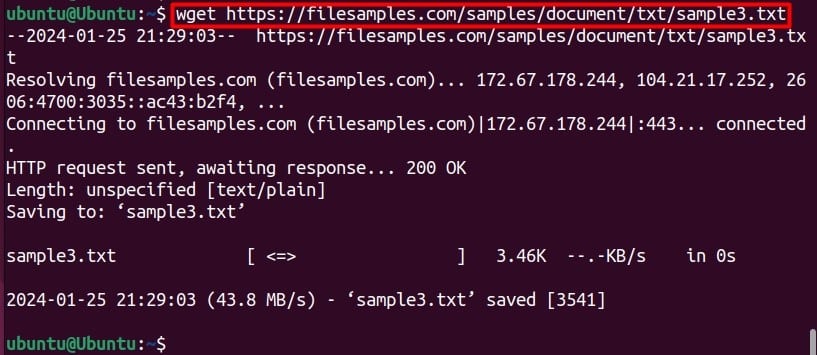

Download a Single File with Wget

To download a file with Wget, use the following command:

wget https://filesamples.com/samples/document/txt/sample3.txt

In the above command, you just have to input the URL for the file you want to download, and the Wget tool will fetch the file for you. The basic format for this command is as follows:

wget <url>

Note: By default, Wget saves downloaded files in the current working directory.
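In a script, it helps to check Wget's exit status (0 on success, non-zero on failure) before relying on the downloaded file. A minimal sketch, assuming Bash; fetch_one is a hypothetical helper name, not part of Wget:

```shell
#!/usr/bin/env bash
# fetch_one: hypothetical helper that downloads one URL into the current
# directory with wget and reports whether the download worked.
fetch_one() {
  local url="$1"
  if wget -q "$url"; then            # -q: suppress wget's progress output
    echo "saved: ${url##*/}"         # wget normally names the file after the last URL segment
  else
    local status=$?                  # exit status of the failed wget call
    echo "download failed (wget exit status $status): $url" >&2
    return "$status"
  fi
}

# Example:
# fetch_one https://filesamples.com/samples/document/txt/sample3.txt
```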

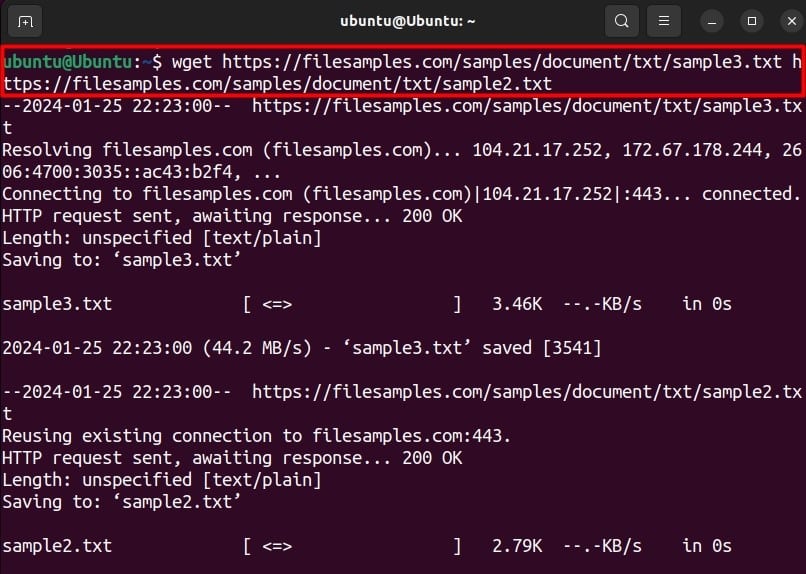

Download Multiple Files with Wget

Wget can also download multiple files with a single command. Pass all the URLs as arguments, and Wget fetches them one after another:

wget https://filesamples.com/samples/document/txt/sample3.txt https://filesamples.com/samples/document/txt/sample2.txt

The simpler form of this command looks like this:

wget <url> <url> ...
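When the URLs live in a script, they can be handed to one Wget call as arguments, or fed to Wget's -i option, which reads a list of URLs (here from standard input, via "-i -"). A minimal sketch, assuming Bash; fetch_all and fetch_all_from_list are hypothetical helper names:

```shell
#!/usr/bin/env bash
# fetch_all: hypothetical helper that hands every URL to a single wget call;
# wget then downloads them one after another.
fetch_all() {
  wget "$@"          # "$@" keeps each URL as a separate, intact argument
}

# fetch_all_from_list: hypothetical helper that writes the URLs one per line
# to wget's standard input; "-i -" tells wget to read its URL list from stdin.
fetch_all_from_list() {
  printf '%s\n' "$@" | wget -i -
}

# Example:
# urls=(
#   https://filesamples.com/samples/document/txt/sample3.txt
#   https://filesamples.com/samples/document/txt/sample2.txt
# )
# fetch_all "${urls[@]}"
```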

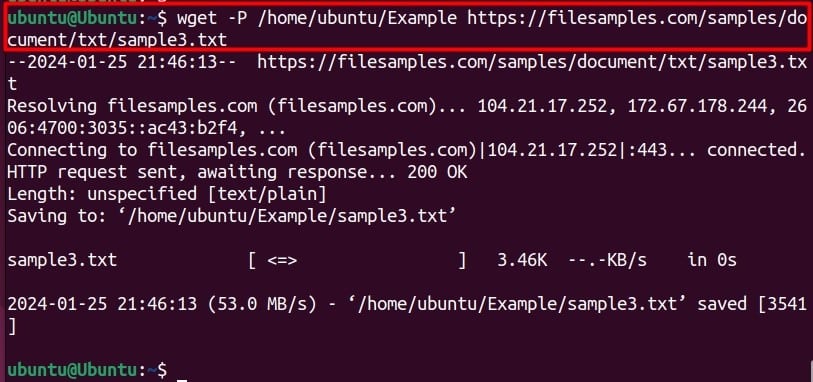

Download in a Specific Directory

To download a file to a specific directory other than the current one, use the following command:

wget -P /home/ubuntu/Example https://filesamples.com/samples/document/txt/sample3.txt

In this command, -P (--directory-prefix) sets the directory where the file is saved. The general form of the command is:

wget -P <path> <url>
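In a script, the target directory is usually kept in a variable. Wget creates the -P directory if it does not exist yet. A minimal sketch, assuming Bash; fetch_into is a hypothetical helper and the $HOME/Example path is only an illustration:

```shell
#!/usr/bin/env bash
# fetch_into: hypothetical helper; $1 is the target directory, the remaining
# arguments are URLs. -P (--directory-prefix) tells wget where to save them.
fetch_into() {
  local dir="$1"
  shift                 # drop the directory so "$@" holds only the URLs
  wget -P "$dir" "$@"
}

# Example (the path is illustrative):
# fetch_into "$HOME/Example" https://filesamples.com/samples/document/txt/sample3.txt
```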

Download and Rename Files

Instead of changing a file’s name after downloading it, you can rename the file while downloading it and save it under the name of your choice. To do that, run this command in your terminal window:

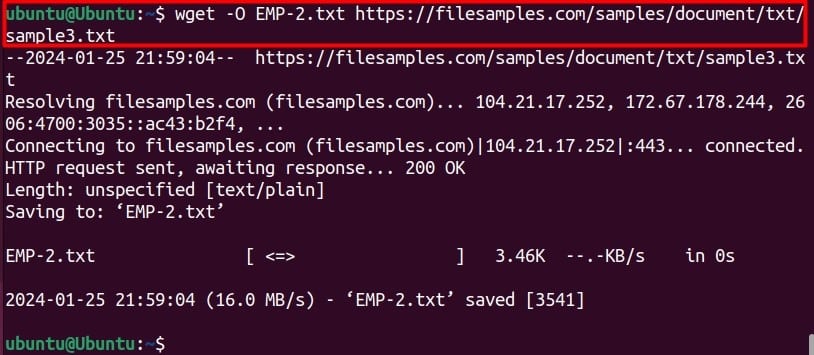

wget -O EMP-2.txt https://filesamples.com/samples/document/txt/sample3.txt

In this command, -O (--output-document) sets the output file: type the new file name, including its extension, followed by the URL of the file to download. The general form of the command is:

wget -O <file_name> <url>
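Because -O fixes the output name before the transfer starts, a failed download can leave an empty or partial file under the final name. A common pattern, sketched here under that assumption, is to download to a temporary name and rename it only when Wget succeeds. fetch_as is a hypothetical helper, and the ".part" suffix is an arbitrary choice:

```shell
#!/usr/bin/env bash
# fetch_as: hypothetical helper; $1 is the desired file name, $2 the URL.
# The file is written to "<name>.part" first and renamed to <name> only when
# wget exits successfully, so a failed run never leaves a bogus <name>.
fetch_as() {
  local name="$1" url="$2"
  if wget -O "$name.part" "$url"; then
    mv -- "$name.part" "$name"
  else
    local status=$?
    rm -f -- "$name.part"   # discard whatever wget managed to write
    return "$status"
  fi
}

# Example:
# fetch_as EMP-2.txt https://filesamples.com/samples/document/txt/sample3.txt
```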

Rename and Save in a Specific Directory

To save the file under a custom name in a specific location, pass the full path to -O. Wget ignores -P when -O is also given, so the directory has to be part of the -O value:

wget -O <path>/<file_name> <url>
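Since -O bypasses Wget's usual file naming (including any -P prefix) and does not create missing directories, a script can create the directory first and then pass the complete path to -O. A minimal sketch, assuming Bash; fetch_to_path is a hypothetical helper and the path in the example is illustrative:

```shell
#!/usr/bin/env bash
# fetch_to_path: hypothetical helper; $1 is the full destination path
# (directory plus file name), $2 the URL. mkdir -p creates any missing
# parent directories before wget writes the file.
fetch_to_path() {
  local dest="$1" url="$2"
  mkdir -p -- "$(dirname -- "$dest")" || return
  wget -O "$dest" "$url"
}

# Example (the path is illustrative):
# fetch_to_path "$HOME/Example/EMP-2.txt" https://filesamples.com/samples/document/txt/sample3.txt
```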

Downloading Files Recursively

Wget can also download complete websites or web pages for offline viewing and mirroring. Websites are downloaded with their additional files, such as images, stylesheets, pages, and scripts. Because these files are linked from the HTML, Wget can follow the links, download the files, and recreate the site's original directory structure locally.

To download recursively, use the -r option. By default, Wget follows links up to five levels deep; you can change this with the -l (level) option. The command for a recursive download with a specific depth is as follows:

wget -r -l <depth> <url>
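For a usable offline copy, -r and -l are usually combined with a few other standard Wget options: --no-parent keeps Wget from climbing above the starting directory, -p (--page-requisites) fetches the images and stylesheets each page needs, and -k (--convert-links) rewrites links so they work locally. A minimal sketch, assuming Bash; mirror_site is a hypothetical helper and example.com is a placeholder:

```shell
#!/usr/bin/env bash
# mirror_site: hypothetical helper for an offline copy; $1 is the start URL,
# $2 the recursion depth (defaults to 5, the same as wget's own default).
mirror_site() {
  local url="$1" depth="${2:-5}"
  # -r           follow links recursively
  # -l           maximum recursion depth
  # --no-parent  never ascend above the starting directory
  # -p           also fetch images, CSS, etc. needed to display each page
  # -k           rewrite links in saved pages so they work offline
  wget -r -l "$depth" --no-parent -p -k "$url"
}

# Example (two levels deep; the URL is a placeholder):
# mirror_site https://example.com/docs/ 2
```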

Download Files via FTP Using Wget

To download files from an FTP server that requires a login, you need the FTP account's username and password. The command for an FTP download is as follows:

wget --ftp-user=<username> --ftp-password=<password> <url>
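Typing the password directly into the command leaves it in your shell history. A minimal sketch that reads it silently first, assuming Bash; ftp_fetch is a hypothetical helper and the server in the example is a placeholder. Note that the password is still passed to Wget as an argument, so it may be visible in the process list while Wget runs:

```shell
#!/usr/bin/env bash
# ftp_fetch: hypothetical helper; $1 is the FTP user name, $2 the ftp:// URL.
# read -s keeps the typed password off the screen and out of the shell history.
ftp_fetch() {
  local user="$1" url="$2" pass
  read -rsp "FTP password for $user: " pass
  echo >&2   # move to a new line after the hidden input
  wget --ftp-user="$user" --ftp-password="$pass" "$url"
}

# Example (server and path are placeholders):
# ftp_fetch alice ftp://ftp.example.com/pub/report.txt
```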

Resuming the Incomplete Downloads Using Wget

To resume a download from where it stopped, add the -c (--continue) option to your command. The command to resume the download looks like this:

wget -c <url>
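On an unreliable connection, -c can be wrapped in a retry loop so that each new attempt continues from the bytes already on disk. A minimal sketch, assuming Bash; resume_until_done is a hypothetical helper, and the defaults of five attempts and a five-second pause are arbitrary choices:

```shell
#!/usr/bin/env bash
# resume_until_done: hypothetical helper; $1 is the URL, $2 the maximum number
# of attempts (default 5), $3 the pause between attempts in seconds (default 5).
# Every attempt uses -c, so wget continues the partial file instead of restarting.
resume_until_done() {
  local url="$1" max="${2:-5}" delay="${3:-5}" attempt=1
  until wget -c "$url"; do
    if (( attempt >= max )); then
      echo "giving up after $attempt attempts: $url" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Example:
# resume_until_done https://filesamples.com/samples/document/txt/sample3.txt
```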


Utilizing the Curl Command in Linux

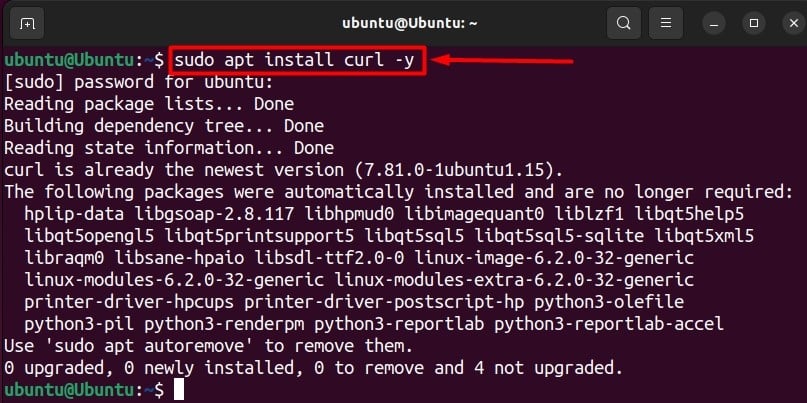

First, make sure curl is installed. On Debian- or Ubuntu-based systems, run:

sudo apt install curl -y

Download a Single File with Curl

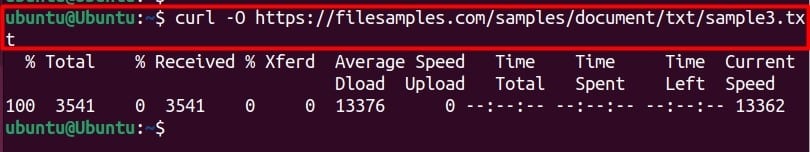

By default, curl prints the downloaded content to the terminal (standard output) instead of saving it. To save it as a file, use the -O (--remote-name) option, which stores the file in the current directory under its remote name. The command to download is as follows:

curl -O https://filesamples.com/samples/document/txt/sample3.txt

The general form of the command is:

curl -O <url>
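Unlike Wget, curl treats an HTTP error page such as a 404 as a successful transfer unless told otherwise, and it does not follow redirects by default. A minimal sketch of a more script-friendly single-file download, assuming Bash; curl_fetch is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# curl_fetch: hypothetical helper that saves one URL under its remote name.
#   -f  exit non-zero on HTTP errors (e.g. 404) instead of saving the error page
#   -s  hide the progress meter
#   -S  still print an error message when something fails
#   -L  follow redirects
#   -O  save under the remote file name in the current directory
curl_fetch() {
  curl -fsSL -O "$1"
}

# Example:
# curl_fetch https://filesamples.com/samples/document/txt/sample3.txt
```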

Download Multiple Files with Curl

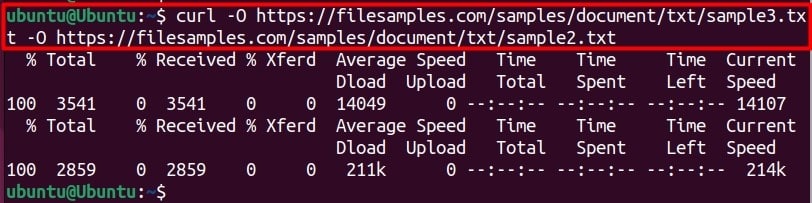

To download multiple files with curl, put the -O option before every URL:

curl -O https://filesamples.com/samples/document/txt/sample3.txt -O https://filesamples.com/samples/document/txt/sample2.txt

Each -O applies to the URL that follows it, so every file is saved under its own remote name. The general form of the command is:

curl -O <url> -O <url>
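Repeating -O gets tedious for long lists. curl's --remote-name-all option applies -O to every URL on the command line, and curl's URL globbing can expand ranges such as [2-3] itself. A minimal sketch, assuming Bash; curl_fetch_all is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# curl_fetch_all: hypothetical helper. --remote-name-all makes every URL on
# the command line behave as if it had its own -O.
curl_fetch_all() {
  curl -fsSL --remote-name-all "$@"
}

# Example: both sample files via the helper ...
# curl_fetch_all https://filesamples.com/samples/document/txt/sample3.txt \
#                https://filesamples.com/samples/document/txt/sample2.txt
# ... or via curl's URL globbing (quote the URL so the shell leaves [] alone):
# curl -O "https://filesamples.com/samples/document/txt/sample[2-3].txt"
```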

Download in a Specific Directory

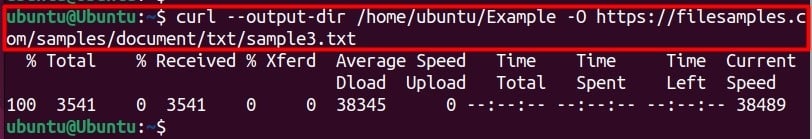

Instead of downloading in the current directory, you can specify the directory in which you want the file to be downloaded. To do that, you can use the following command:

curl --output-dir /home/ubuntu/Example -O https://filesamples.com/samples/document/txt/sample3.txt

The --output-dir option (available in curl 7.73.0 and later) sets the directory in which the file is saved. The general form of the command is:

curl --output-dir <path> -O <url>

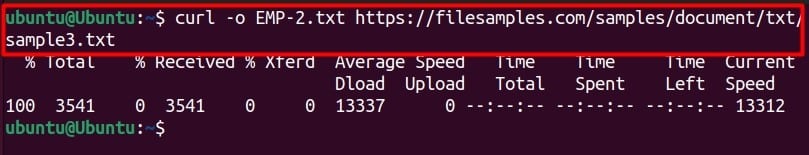
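If the target directory does not exist yet, curl fails unless --create-dirs is also given. A minimal sketch, assuming Bash; curl_fetch_into is a hypothetical helper and the path in the example is illustrative:

```shell
#!/usr/bin/env bash
# curl_fetch_into: hypothetical helper; $1 is the target directory, $2 the URL.
#   --create-dirs  create the --output-dir directory first if it is missing
#   --output-dir   directory in which -O saves the file
#   -fsSL          fail on HTTP errors, stay quiet but show errors, follow redirects
curl_fetch_into() {
  curl -fsSL --create-dirs --output-dir "$1" -O "$2"
}

# Example (the path is illustrative):
# curl_fetch_into "$HOME/Example" https://filesamples.com/samples/document/txt/sample3.txt
```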

Download and Rename Files

You can give a file a custom name while downloading it. To do so, use this command:

curl -o EMP-2.txt https://filesamples.com/samples/document/txt/sample3.txt

The lowercase -o (--output) option saves the download under the name you pass to it. The general form of the command is:

curl -o <filename> <url>

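Note: The -o option accepts a full path, so a single option can set both the directory and the file name, for example -o /home/ubuntu/Example/EMP-2.txt. The directory must already exist unless you also pass --create-dirs.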
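Combining a full -o path with --create-dirs gives curl the rename-and-relocate behaviour in one call. A minimal sketch, assuming Bash; curl_fetch_as is a hypothetical helper and the path in the example is illustrative:

```shell
#!/usr/bin/env bash
# curl_fetch_as: hypothetical helper; $1 is the destination path (directory
# and file name), $2 the URL. --create-dirs creates any missing directories
# in the -o path before the file is written.
curl_fetch_as() {
  curl -fsSL --create-dirs -o "$1" "$2"
}

# Example (the path is illustrative):
# curl_fetch_as "$HOME/Example/EMP-2.txt" https://filesamples.com/samples/document/txt/sample3.txt
```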

Download Files via FTP with Curl

To download files from an FTP server that requires a login, you need the account's username and password. The command for this is as follows:

curl -u <username>:<password> -O <url>

In this command, -u (--user) passes the credentials as <username>:<password>, followed by the URL of the file.
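If you give -u only a user name, curl prompts for the password itself, which keeps the password out of the command line and your shell history. A minimal sketch, assuming Bash; curl_ftp_fetch is a hypothetical helper and the server in the example is a placeholder:

```shell
#!/usr/bin/env bash
# curl_ftp_fetch: hypothetical helper; $1 is the FTP user name, $2 the ftp:// URL.
# Passing only the user name to -u makes curl ask for the password interactively.
curl_ftp_fetch() {
  curl -u "$1" -O "$2"
}

# Example (server and path are placeholders):
# curl_ftp_fetch alice ftp://ftp.example.com/pub/report.txt
```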

Resuming the Incomplete Downloads Using Curl

To resume a download from where it stopped, use the following command:

curl -C <offset> -O <url>

In this command, -C (--continue-at) tells curl to resume the transfer at the given byte offset, and -O saves the file under its remote name. Working out the offset by hand is error-prone, so it is better to pass a single dash (-) instead of a number; curl then uses the size of the partially downloaded file as the offset:

curl -C - -O <url>
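For large files on an unreliable connection, "-C -" can be wrapped in a retry loop so that each attempt picks up where the previous one stopped. A minimal sketch, assuming Bash; curl_resume_until_done is a hypothetical helper, and the defaults of five attempts and a five-second pause are arbitrary choices:

```shell
#!/usr/bin/env bash
# curl_resume_until_done: hypothetical helper; $1 is the URL, $2 the maximum
# number of attempts (default 5), $3 the pause in seconds (default 5).
# "-C -" lets curl work out the resume offset from the partial file on disk.
curl_resume_until_done() {
  local url="$1" max="${2:-5}" delay="${3:-5}" attempt=1
  until curl -C - -O "$url"; do
    if (( attempt >= max )); then
      echo "giving up after $attempt attempts: $url" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Example:
# curl_resume_until_done https://filesamples.com/samples/document/txt/sample3.txt
```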

Differences Between Curl and Wget Usage

In the world of command-line file retrieval, understanding the distinctions between curl and wget is essential. Here’s a breakdown of their usage and when to favor one over the other.

- Basic Command Syntax: Both wget and curl take the URL as their main argument, so simple downloads look much alike. The choice often comes down to personal preference or specific requirements.
- Output to Console: curl writes downloaded content to the console (standard output) by default and needs -o or -O to save it to a file. wget saves to a file by default; use wget -O - to print the content to the console instead.
- Downloading Multiple Files: wget accepts several URLs at once, can read a list of URLs from a file with -i, and supports wildcards in FTP URLs. curl offers URL globbing with curly braces and ranges.
- Protocols: wget focuses on HTTP, HTTPS, and FTP, while curl supports a much wider array of protocols, making it a go-to tool for diverse network tasks.
- Recursive Download: wget can fetch files from a site or directory recursively with the -r option. curl has no recursive mode, so the same task requires manual work or scripting.
- Resume Download: Both tools can resume interrupted downloads: wget with the -c option and curl with -C -.
- User Interaction: wget is designed for non-interactive use, such as scripts and background jobs, whereas curl stands out for its versatility in interactive scenarios.
- Better for Automation: For automated, unattended downloads, wget is a solid choice. curl excels where a script needs fine-grained control over the request.
- SSL Certificate Verification: Both tools verify SSL/TLS certificates by default. To disable verification, which is not recommended, use --no-check-certificate with wget or -k (--insecure) with curl.
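Because the two tools overlap for plain downloads, a script that must run on machines where only one of them is installed can fall back from one to the other. A minimal sketch, assuming Bash; download is a hypothetical function name, and preferring wget over curl is an arbitrary choice:

```shell
#!/usr/bin/env bash
# download: hypothetical helper that saves $1 (URL) to $2 (file name) with
# whichever tool is available, preferring wget. Both branches save the body
# to the given file and return a non-zero status on failure.
download() {
  local url="$1" out="$2"
  if command -v wget >/dev/null 2>&1; then
    wget -q -O "$out" "$url"
  elif command -v curl >/dev/null 2>&1; then
    curl -fsSL -o "$out" "$url"     # -f makes HTTP errors count as failures
  else
    echo "neither wget nor curl is installed" >&2
    return 127
  fi
}

# Example:
# download https://filesamples.com/samples/document/txt/sample3.txt sample3.txt
```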

Conclusion

Wget and Curl are command-line tools for downloading files from websites and servers. They can seem daunting at first, but once you are familiar with their syntax, they become easy to use.

Both commands come with options that make it easy to download a single file or several files at once. You can also choose the directory where a downloaded file is placed and the name it is saved under. Finally, both tools can resume broken or incomplete downloads.
